In-Memory Databases: When and Why to Use Them

At 2:00 AM on a Tuesday, I received an urgent call from our on-call engineer. Our e-commerce platform was crashing under a flash sale that had gone viral on social media. The database was overwhelmed, with response times ballooning to 15+ seconds. As customers abandoned their carts, we rushed to implement a Redis cache layer for product inventory and session data. Within 30 minutes, response times dropped to under 100ms, and we salvaged what could have been a million-dollar disaster. This experience taught me a lesson I'll never forget: sometimes the only way to achieve the performance you need is to keep your data in memory.

What Are In-Memory Databases, Really?

Think of a traditional disk-based database as a librarian who must walk to physical shelves to retrieve books (your data). An in-memory database, by contrast, is like having all the books already spread open on your desk—everything is instantly accessible.

Technically speaking, in-memory databases store their entire dataset in RAM rather than on disk drives. This architectural difference eliminates the fundamental I/O bottleneck of traditional databases. On a spinning disk, a random read means physically moving the disk head, which takes roughly 10ms per operation, compared with roughly 100ns for a memory access: a 100,000x difference. SSDs narrow that gap, but they remain orders of magnitude slower than RAM.

But here's the insight many miss: in-memory databases aren't just faster disk-based databases—they fundamentally change what becomes possible in your architecture. This is the paradigm shift that separates experienced architects from novices.

When Performance Is Non-Negotiable

In-memory databases shine in scenarios where:

Sub-millisecond SLAs: When your system needs guaranteed response times below 1ms (like high-frequency trading or ad bidding platforms), disk-based solutions simply cannot compete. At Stripe, we reduced payment processing latency by 67% by moving authentication tokens to Redis.

Bursty, unpredictable traffic: Systems facing sudden traffic spikes (like flash sales or viral content) benefit immensely from in-memory solutions. Netflix uses EVCache (in-memory) to handle unexpected streaming demand surges during premieres.

Complex data operations: Operations requiring multiple data passes become viable in real-time when data resides in memory. Uber's dispatch system uses an in-memory graph database to calculate optimal driver-rider matches across multiple dimensions in real-time.

While these use cases could theoretically run on traditional databases with massive hardware investments, in-memory systems offer a more elegant and often more cost-effective solution.

The Mechanics: Beyond Simple Speed

The benefits of in-memory databases extend beyond raw speed:

Optimization for CPU Cache Efficiency: Modern in-memory systems employ data structures designed to maximize CPU cache hits. Redis, for example, stores small collections in compact, contiguous encodings, which improves cache locality and reduces trips to main memory.

Lock-Free Algorithms: Many in-memory databases avoid traditional locking, either through non-blocking algorithms or, as Redis does, by executing commands on a single thread. Both approaches remove most lock contention from the hot path.

Reduced Serialization Overhead: With data structures residing directly in memory, there's no need to serialize/deserialize between disk and memory representations, saving CPU cycles.

Predictable Performance: Without disk I/O variance, performance becomes highly consistent and predictable—essential for systems with strict SLAs.

The Hidden Costs Nobody Talks About

In-memory systems introduce unique challenges that are often overlooked:

Recovery planning becomes critical: After crashes, cold starts with empty caches can overwhelm backend systems. LinkedIn developed a sophisticated "warming" strategy for their in-memory user data, loading high-priority profiles first after restarts.

Memory fragmentation: Over time, fragmentation in the memory allocator can inflate memory usage and degrade performance. Facebook found their Redis clusters needed regular planned restarts to maintain consistent performance.

Data structure selection matters enormously: The wrong in-memory data structure can negate all speed advantages. Twitter's timeline service saw a 45% performance improvement simply by switching from hash tables to specialized radix trees for user ID lookups.
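The recovery-planning point above can be sketched in a few lines of Python. This is a minimal illustration of priority-ordered warming, not any particular company's implementation. The names `warm_cache`, `candidates`, `load_from_store`, and `budget` are hypothetical, and a plain dict stands in for the in-memory database.

```python
import heapq
from typing import Callable, Dict, Iterable, List, Tuple


def warm_cache(
    cache: Dict[str, str],
    candidates: Iterable[Tuple[int, str]],
    load_from_store: Callable[[str], str],
    budget: int,
) -> List[str]:
    """Load up to `budget` keys into `cache`, highest priority first.

    `candidates` yields (priority, key) pairs; a larger priority means
    the key should be warmed earlier. Returns the keys in warming order.
    """
    # nlargest returns the top `budget` pairs in descending priority
    # order without sorting the full candidate list.
    top = heapq.nlargest(budget, candidates)
    warmed = []
    for _priority, key in top:
        cache[key] = load_from_store(key)  # one backend read per key
        warmed.append(key)
    return warmed


# Usage: warm only the two most important profiles after a restart.
backend = {"a": "profile-a", "b": "profile-b", "c": "profile-c", "d": "profile-d"}
cache: Dict[str, str] = {}
order = warm_cache(cache, [(5, "a"), (9, "b"), (1, "c"), (7, "d")], backend.__getitem__, budget=2)
```

Capping the number of keys with `budget` keeps the warm-up itself from becoming the load spike it is meant to prevent.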

Implementation Guide: Making the Right Choices

When to Choose In-Memory:

Read-heavy workloads with strict latency requirements

Applications requiring complex data processing at scale (aggregations, real-time analytics)

Systems with predictable, bounded data size that can fit in memory

Session stores, caching layers, and ephemeral data stores

When to Avoid In-Memory:

Primary storage for critical data without proper persistence strategy

Applications with datasets significantly larger than available RAM

Write-heavy workloads that don't benefit from read speed improvements

Data models requiring complex relationships better served by a relational model

Common Implementation Patterns:

Caching Layer: Place an in-memory database in front of your primary database for frequently accessed data

Write-Behind Persistence: Accept writes in memory first, then asynchronously persist to disk

Sharded Architecture: Distribute data across multiple in-memory instances for horizontal scaling

High Availability Pairs: Deploy primary-replica setups with automated failover

Tiered Storage: Keep hot data in memory, warm data on SSDs, cold data on HDDs. Slack's message delivery system demonstrates this beautifully: recent conversations stay in-memory, slightly older messages on SSDs, and historical content on HDDs—all appearing seamless to users.
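Of the patterns above, write-behind persistence is the one whose mechanics are least obvious, so here is a minimal Python sketch. It assumes a plain dict as a stand-in for the durable database. The class name `WriteBehindCache` and its `batch_size` parameter are hypothetical, and a real deployment would call `flush` from a background worker rather than by hand.

```python
from collections import OrderedDict
from typing import Dict, Optional


class WriteBehindCache:
    """Accept writes in memory and persist them later in batches."""

    def __init__(self, store: Dict[str, str], batch_size: int = 100) -> None:
        self.store = store                    # stand-in for the durable database
        self.memory: Dict[str, str] = {}      # the in-memory copy
        # Keys written since the last flush, oldest first.
        self.dirty: "OrderedDict[str, None]" = OrderedDict()
        self.batch_size = batch_size

    def put(self, key: str, value: str) -> None:
        self.memory[key] = value
        self.dirty[key] = None
        self.dirty.move_to_end(key)           # repeated writes collapse into one

    def get(self, key: str) -> Optional[str]:
        if key in self.memory:
            return self.memory[key]
        return self.store.get(key)

    def flush(self) -> int:
        """Persist up to `batch_size` dirty keys; return how many were written."""
        written = 0
        while self.dirty and written < self.batch_size:
            key, _ = self.dirty.popitem(last=False)
            self.store[key] = self.memory[key]
            written += 1
        return written


# Usage: four writes touch three keys; nothing reaches the store until flush().
disk: Dict[str, str] = {}
wb = WriteBehindCache(disk, batch_size=2)
wb.put("a", "1"); wb.put("b", "2"); wb.put("a", "3"); wb.put("c", "4")
```

The trade-off is visible in the sketch: anything still in `dirty` when the process dies is lost, which is why this pattern suits data that can tolerate a small window of loss.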

Real-World Examples

Netflix's EVCache: Netflix built a multi-region in-memory caching service on top of Memcached that handles over 5 trillion requests daily. What makes their implementation special is the asymmetric data replication pattern—they replicate data to multiple regions but with different consistency models based on data importance.

DoorDash's Service Level Caching: DoorDash implemented a three-tiered Redis architecture where each service maintains its own local Redis instance, with a shared Redis cluster for cross-service data and a persistent Redis store for recovery. This approach reduced their p99 latency from seconds to under 20ms during peak hours.

Implementation Approaches Beyond Redis

While Redis dominates discussions around in-memory databases, several important alternatives exist with unique capabilities:

Apache Ignite: Provides ACID transactions with distributed SQL support while maintaining in-memory performance

Hazelcast: Excels at distributed computing with built-in data processing pipelines

Memcached: Still relevant for simple caching with minimal memory overhead

VoltDB: Designed for high-throughput OLTP workloads with deterministic performance

Each platform optimizes for different scenarios. For example, Hazelcast's data processing capabilities make it ideal for real-time analytics on streaming data, while VoltDB's deterministic latency suits financial transaction processing.

Practical Application: Build a Smart Rate Limiter

Let's implement a distributed rate limiter using Redis that leverages the unique properties of in-memory data structures—a common requirement in high-scale APIs:

Define your limits: Decide on window size (e.g., 1 minute) and maximum requests per window (e.g., 100)

Implementation strategy: Use a Redis sorted set with:

Key: rate:limit:{user_id}

Value: Timestamp of each request

Score: Same timestamp for efficient range queries

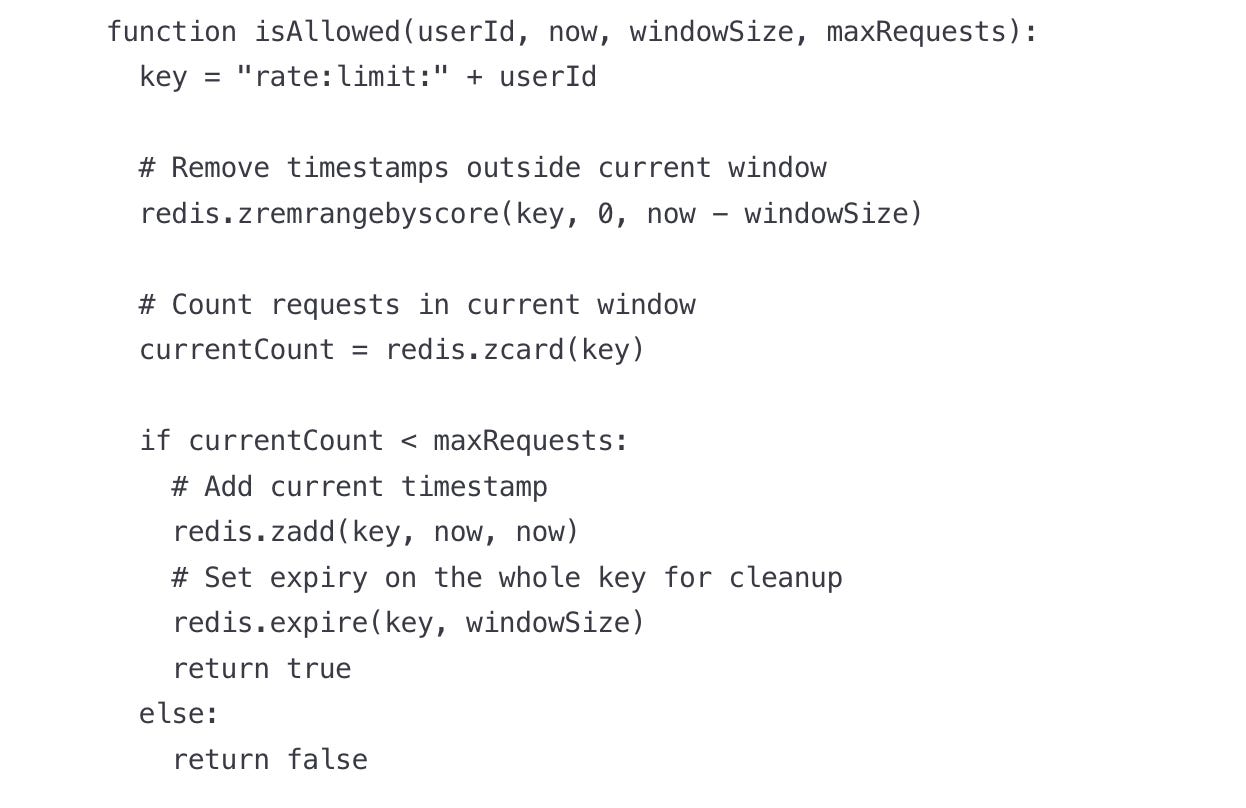

Algorithm (pseudocode):
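A minimal sketch of the sliding-window steps in Python, under a few stated assumptions. The function only uses four calls that exist on a redis-py client (`zremrangebyscore`, `zcard`, `zadd`, `expire`). So that it runs without a server, the example includes `InMemorySortedSets`, a hypothetical stand-in that mimics just those calls. The names `allow_request`, `limit`, and `window_seconds` are also illustrative.

```python
import time
import uuid
from typing import Dict, Optional


class InMemorySortedSets:
    """Hypothetical stand-in mimicking the four redis-py calls used below."""

    def __init__(self) -> None:
        self._sets: Dict[str, Dict[str, float]] = {}

    def zremrangebyscore(self, name: str, lo: float, hi: float) -> int:
        zset = self._sets.get(name, {})
        stale = [m for m, score in zset.items() if lo <= score <= hi]
        for member in stale:
            del zset[member]
        return len(stale)

    def zcard(self, name: str) -> int:
        return len(self._sets.get(name, {}))

    def zadd(self, name: str, mapping: Dict[str, float]) -> int:
        zset = self._sets.setdefault(name, {})
        added = sum(1 for member in mapping if member not in zset)
        zset.update(mapping)
        return added

    def expire(self, name: str, seconds: int) -> bool:
        return name in self._sets  # TTLs are not modeled in this stand-in


def allow_request(client, user_id: str, limit: int = 100,
                  window_seconds: int = 60, now: Optional[float] = None) -> bool:
    """Return True if this request fits within the user's sliding window."""
    now = time.time() if now is None else now
    key = f"rate:limit:{user_id}"
    # 1. Drop entries that have fallen out of the window.
    client.zremrangebyscore(key, 0, now - window_seconds)
    # 2. Reject if the window is already full.
    if client.zcard(key) >= limit:
        return False
    # 3. Record the request. The random suffix is an assumption added here so
    #    that two requests with the same timestamp do not overwrite each other.
    client.zadd(key, {f"{now}:{uuid.uuid4().hex}": now})
    # 4. Let keys for idle users expire on their own.
    client.expire(key, window_seconds)
    return True


# Usage: three requests allowed per 60-second window.
limiter = InMemorySortedSets()
results = [allow_request(limiter, "u1", limit=3, now=1000.0) for _ in range(4)]
```

Against a real Redis server, the check in step 2 and the write in step 3 can interleave across clients, so the four commands would normally run atomically, for example inside a transaction or a server-side script.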

Advanced implementation: HybridDatabaseRouter

You can extend this concept into a full hybrid approach for any data access. Here's a practical pattern we deployed at scale for payment processing. It automatically routes queries to either in-memory or disk storage based on recency:
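A minimal sketch of such a router in Python follows. It is an illustration under stated assumptions, not the production code referred to above. Two dicts stand in for the in-memory and disk tiers, recency is modeled with a TTL, and `cache_available`, `ttl_seconds`, and `clock` are hypothetical names introduced to make the behavior easy to exercise.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class HybridDatabaseRouter:
    """Serve recent keys from memory and fall back to the durable store."""

    def __init__(self, store: Dict[str, str], ttl_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.store = store                               # stand-in for disk storage
        self.cache: Dict[str, Tuple[str, float]] = {}    # key -> (value, expiry)
        self.ttl = ttl_seconds
        self.clock = clock
        self.cache_available = True                      # set False to simulate an outage

    def _cache_get(self, key: str) -> Optional[str]:
        if not self.cache_available:
            raise ConnectionError("cache unavailable")
        entry = self.cache.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:                   # no longer "recent"
            del self.cache[key]
            return None
        return value

    def _cache_set(self, key: str, value: str) -> None:
        if not self.cache_available:
            raise ConnectionError("cache unavailable")
        self.cache[key] = (value, self.clock() + self.ttl)

    def read(self, key: str) -> Optional[str]:
        try:
            cached = self._cache_get(key)
            if cached is not None:
                return cached
        except ConnectionError:
            pass                                         # degrade to the store
        value = self.store.get(key)
        if value is not None:
            try:
                self._cache_set(key, value)              # warm on read
            except ConnectionError:
                pass
        return value

    def write(self, key: str, value: str) -> None:
        self.store[key] = value                          # durable write first
        try:
            self._cache_set(key, value)                  # then write through
        except ConnectionError:
            pass


# Usage with a controllable clock so expiry can be demonstrated.
now = [0.0]
disk = {"old": "v0"}
router = HybridDatabaseRouter(disk, ttl_seconds=10, clock=lambda: now[0])
router.write("k", "v1")
first_read = router.read("old")
```

One gap in this sketch is that a write made while the cache is down leaves any older cached copy in place, so a production version would also need to invalidate or rebuild entries when the cache recovers.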

This router provides several key benefits:

Transparent read-through and write-through caching

Automatic warming of cache for recently accessed data

Resilience against cache failures

The beauty of these solutions is their simplicity and power: because every operation is served from memory, they can sustain very high request rates at sub-millisecond latency on modest hardware.

In-memory databases aren't magic bullets, but when applied strategically, they can transform seemingly impossible performance requirements into achievable realities. The key is understanding exactly when and how to leverage them within your broader system architecture.

Are you ready to move beyond theoretical knowledge? Implement the rate limiter above, load test it with different traffic patterns, and discover firsthand the power and limitations of in-memory data storage. Your understanding of system design will never be the same.