Introduction to Load Balancing

The Problem of Popularity

Imagine you've just launched a promising new web application. Perhaps it's a social platform, an e-commerce site, or a media streaming service. Word spreads, users flood in, and suddenly your single server is struggling to keep up with hundreds, thousands, or even millions of requests. Pages load slowly, features time out, and frustrated users begin to leave.

This is the paradox of digital success: the more popular your service becomes, the more likely it is to collapse under its own weight.

Enter load balancing—the art and science of distributing workloads across multiple computing resources to maximize throughput, minimize response time, and avoid system overload.

What Exactly is Load Balancing?

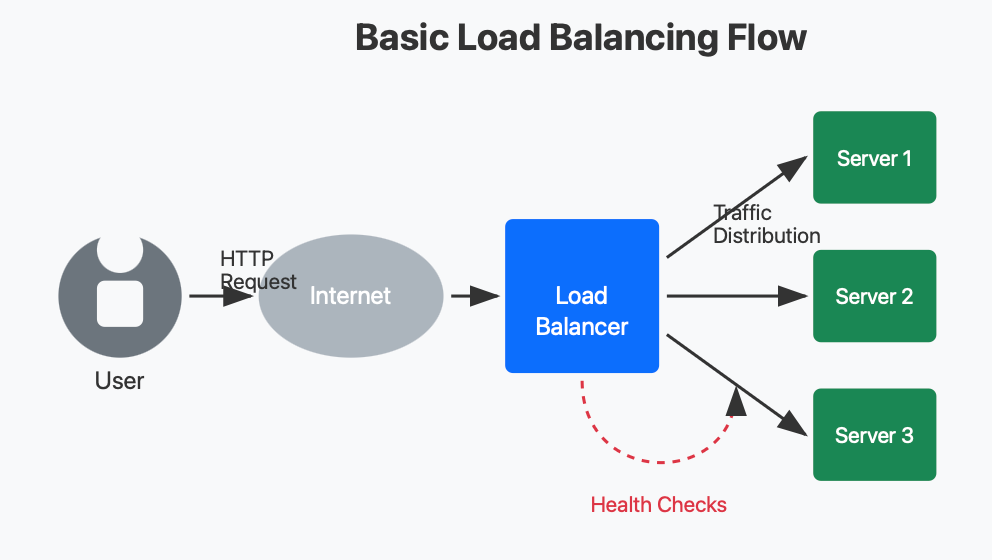

At its core, load balancing is a traffic management solution that sits between users and your backend servers. When a user requests access to your application, the load balancer intercepts that request and directs it to the most appropriate server based on predetermined rules and real-time server conditions.

Think of it as an intelligent receptionist at a busy medical clinic. As patients arrive, the receptionist doesn't simply send everyone to the same doctor. Instead, they consider which doctors are available, their specialties, current workloads, and even patient preferences before making an assignment. The goal is to ensure patients receive timely care while preventing any single doctor from becoming overwhelmed.

In technical terms, load balancing achieves three critical objectives:

Service Distribution: Spreads workloads across multiple servers

Health Monitoring: Continuously checks server status and availability

Request Routing: Directs traffic according to optimized algorithms

The Traffic Flow: How Load Balancing Works

To understand load balancing, let's walk through what happens when a user accesses a load-balanced website:

Request Initiation: A user types your domain name (e.g., www.yourservice.com) into their browser or clicks a link to your application.

DNS Resolution: The domain name resolves to the IP address of your load balancer (not your individual servers).

Load Balancer Reception: The load balancer receives the incoming request.

Server Selection: Based on its configured algorithm, the load balancer selects the most appropriate server to handle the request. This might be the server with the fewest active connections, the fastest response time, or simply the next server in a rotation.

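Health Check Verification: The load balancer forwards only to servers it currently considers healthy. Health checks typically run continuously in the background, so this step is a lookup of the server's last known status rather than a fresh probe for every request.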

Request Forwarding: The load balancer forwards the request to the chosen server.

Response Processing: The server processes the request and sends a response back to the load balancer.

Client Delivery: The load balancer returns the response to the user's browser.

This entire process happens in milliseconds, creating a seamless experience for the user who remains unaware of the complex orchestration happening behind the scenes.
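
The selection, health verification, and forwarding steps above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not how any particular product works: the `Backend` and `LoadBalancer` classes are hypothetical, the `handler` callable stands in for a real server, and selection is a plain rotation.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Backend:
    name: str
    healthy: bool                      # last known health status
    handler: Callable[[str], str]      # stands in for the real server


class LoadBalancer:
    """Toy balancer: rotate through backends, skipping unhealthy ones."""

    def __init__(self, backends: List[Backend]) -> None:
        self.backends = backends
        self._next = 0

    def handle(self, request: str) -> str:
        # Try each backend at most once, starting at the rotation pointer.
        for _ in range(len(self.backends)):
            backend = self.backends[self._next]
            self._next = (self._next + 1) % len(self.backends)
            if backend.healthy:
                # Forward the request and relay the response to the client.
                return backend.handler(request)
        raise RuntimeError("no healthy backends available")


backends = [
    Backend("app-1", True, lambda req: f"app-1 served {req}"),
    Backend("app-2", False, lambda req: f"app-2 served {req}"),
    Backend("app-3", True, lambda req: f"app-3 served {req}"),
]
lb = LoadBalancer(backends)
responses = [lb.handle("GET /") for _ in range(3)]
# app-2 is marked unhealthy, so traffic alternates between app-1 and app-3.
```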

The Benefits of Load Balancing

Load balancing offers numerous advantages that extend far beyond simply handling more traffic:

1. High Availability (HA)

Perhaps the most critical benefit of load balancing is ensuring your service remains available even when individual components fail. If a server crashes or becomes unresponsive, the load balancer automatically redirects traffic to healthy servers, preventing service interruptions.

Real-world impact: For e-commerce platforms, this could be the difference between a successful Black Friday sale and millions in lost revenue due to site crashes.

2. Scalability

As your user base grows, load balancing allows you to add more servers to your infrastructure without architectural changes. Simply deploy new servers, add them to the load balancer's server pool, and they'll start receiving traffic.

Real-world impact: Netflix can instantly scale up server capacity when a popular new show releases, handling millions of simultaneous streams without degradation in quality.

3. Redundancy

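Load balancing removes the single point of failure at the server tier. With multiple servers handling the same workloads, the failure of any individual server won't bring down your entire service. The load balancer itself must also be deployed redundantly (for example, as a pair with failover), or it becomes the new single point of failure.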

Real-world impact: Financial services can maintain 99.999% uptime (less than 5.26 minutes of downtime per year), essential for critical banking operations.
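
The downtime figure follows from simple arithmetic, sketched here in Python. The function name is illustrative, and the calculation assumes a 365-day year.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def max_downtime_minutes(availability_percent: float) -> float:
    """Yearly downtime budget implied by an availability target."""
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


five_nines = max_downtime_minutes(99.999)   # roughly 5.26 minutes
three_nines = max_downtime_minutes(99.9)    # roughly 525.6 minutes
```

Each additional "nine" cuts the allowed downtime by a factor of ten, which is why the jump from 99.9% to 99.999% is so demanding.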

4. Flexibility and Maintenance

With load balancing, you can perform maintenance on individual servers without taking your entire service offline. The load balancer simply stops routing traffic to servers undergoing maintenance.

Real-world impact: SaaS providers can roll out updates during business hours without disrupting customer operations.

5. Enhanced Security

Many modern load balancers include security features like SSL termination, DDoS protection, and application firewalls that can filter out malicious traffic before it reaches your application servers.

Real-world impact: E-commerce sites can better protect sensitive customer data and maintain PCI compliance.

Load Balancing Algorithms: The Decision Makers

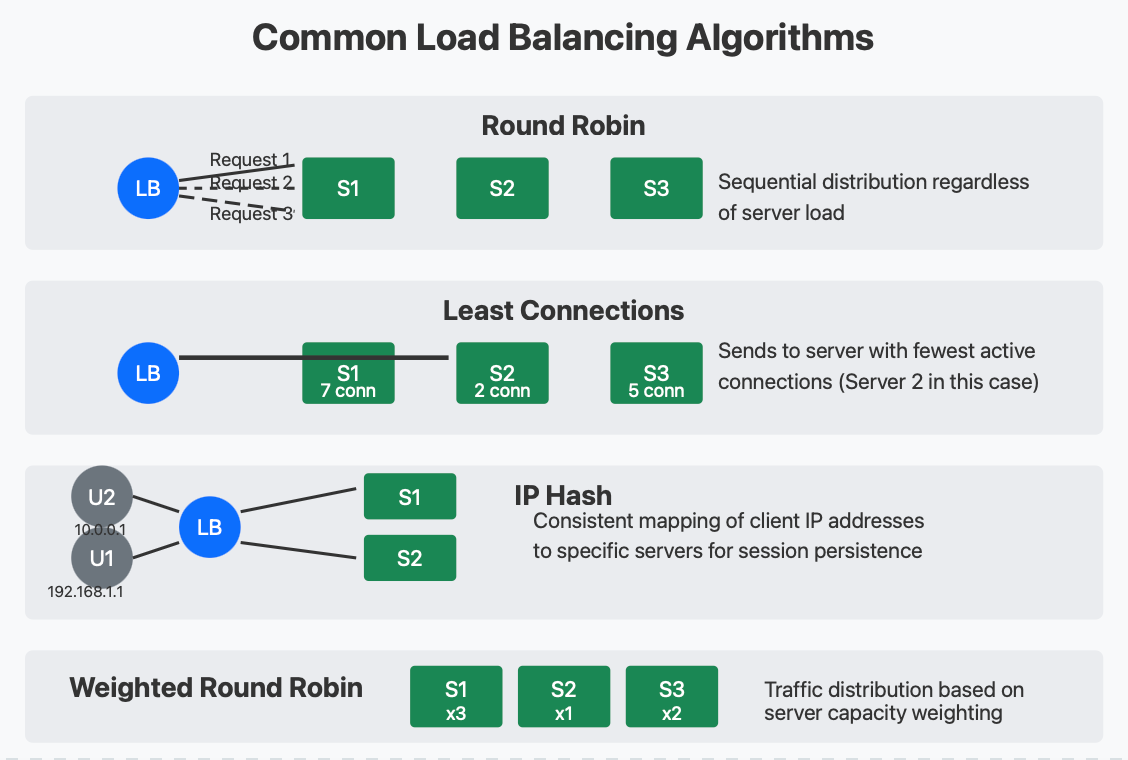

The intelligence of a load balancer lies in its algorithm—the logic it uses to decide which server should handle each incoming request. Let's explore some common algorithms:

1. Round Robin

How it works: The load balancer cycles through the list of servers in sequence. The first request goes to server 1, the second to server 2, and so on, cycling back to the beginning once all servers have been used.

Best for: Simple implementations where all servers have equal capacity and the request workload is fairly uniform.

Limitations: Doesn't account for varying server capabilities or request complexity.
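
A minimal sketch of round robin, assuming a fixed list of placeholder server names:

```python
from itertools import cycle

servers = ["server-1", "server-2", "server-3"]
rotation = cycle(servers)  # endless iterator over the server list


def next_server() -> str:
    """Return the next server in the rotation."""
    return next(rotation)


picks = [next_server() for _ in range(5)]
# picks wraps around after the third request:
# ["server-1", "server-2", "server-3", "server-1", "server-2"]
```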

2. Least Connections

How it works: The load balancer tracks the number of active connections to each server and routes new requests to the server with the fewest active connections.

Best for: Environments where requests may take varying amounts of time to process or where servers have different processing capabilities.

Limitations: Connection count alone doesn't always accurately reflect actual server load.
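
One way to sketch least connections is a counter per server that goes up when a request starts and down when it finishes. The class and method names below are hypothetical, and ties are broken by list order for simplicity.

```python
from typing import Dict, List


class LeastConnectionsBalancer:
    def __init__(self, servers: List[str]) -> None:
        self.active: Dict[str, int] = {server: 0 for server in servers}

    def acquire(self) -> str:
        """Pick the server with the fewest active connections."""
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        """Call when a request on this server has completed."""
        self.active[server] -= 1


lb = LeastConnectionsBalancer(["a", "b", "c"])
first_three = [lb.acquire() for _ in range(3)]  # one connection each
lb.release("b")                                  # b finishes early
next_pick = lb.acquire()                         # b now has the fewest
```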

3. IP Hash

How it works: The load balancer creates a hash based on the client's IP address and consistently maps that hash to a specific server.

Best for: Applications that require session persistence, where the same user should consistently connect to the same server.

Limitations: Can lead to uneven distribution if many users share IP addresses (e.g., behind corporate firewalls).
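
The mapping can be sketched as a hash of the client address taken modulo the pool size. This example uses SHA-256 from the standard library because Python's built-in `hash()` for strings varies between processes. The function name and addresses are illustrative.

```python
import hashlib
from typing import List


def pick_server(client_ip: str, servers: List[str]) -> str:
    """Map a client IP to a server deterministically."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["server-1", "server-2", "server-3"]
first = pick_server("203.0.113.7", servers)
second = pick_server("203.0.113.7", servers)  # same client, same server
```

Note that with this simple modulo scheme, adding or removing a server changes the mapping for many clients at once.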

4. Weighted Methods

How it works: Administrators assign different weights to servers based on their capacity. Servers with higher weights receive proportionally more traffic.

Best for: Heterogeneous environments with servers of varying capabilities.

Limitations: Requires manual configuration and adjustment as traffic patterns change.
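
As one illustration of a weighted method, the sketch below uses a smooth weighted round-robin scheme, which spreads a heavy server's turns out instead of sending it a burst. The class name, server names, and weights are assumptions made for the example.

```python
from typing import Dict


class SmoothWeightedRoundRobin:
    def __init__(self, weights: Dict[str, int]) -> None:
        self.weights = dict(weights)
        self.current = {server: 0 for server in weights}
        self.total = sum(weights.values())

    def pick(self) -> str:
        # Every server gains its weight, then the leader pays back the total.
        for server, weight in self.weights.items():
            self.current[server] += weight
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= self.total
        return chosen


lb = SmoothWeightedRoundRobin({"big": 5, "small-1": 1, "small-2": 1})
picks = [lb.pick() for _ in range(7)]
# Over one full cycle of 7 requests, "big" is chosen 5 times
# and each small server once.
```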

5. Response Time

How it works: The load balancer monitors how long each server takes to respond to requests and favors the fastest-responding servers.

Best for: Performance-critical applications where minimizing latency is crucial.

Limitations: May cause oscillation as servers become slower when more heavily loaded.
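
A response-time strategy needs a running estimate of each server's latency. The sketch below assumes an exponentially weighted moving average with a hypothetical smoothing factor `alpha`. Servers start at zero so that new ones get tried first.

```python
from typing import Dict, List


class ResponseTimeBalancer:
    def __init__(self, servers: List[str], alpha: float = 0.3) -> None:
        self.alpha = alpha  # weight given to the newest measurement
        self.avg_ms: Dict[str, float] = {server: 0.0 for server in servers}

    def record(self, server: str, elapsed_ms: float) -> None:
        """Fold a new latency sample into the server's moving average."""
        previous = self.avg_ms[server]
        self.avg_ms[server] = self.alpha * elapsed_ms + (1 - self.alpha) * previous

    def pick(self) -> str:
        """Favor the server with the lowest average response time."""
        return min(self.avg_ms, key=self.avg_ms.get)


lb = ResponseTimeBalancer(["a", "b", "c"])
lb.record("a", 100)
lb.record("b", 20)
lb.record("c", 50)
fastest = lb.pick()          # "b" has the lowest average so far
lb.record("b", 500)          # b slows down under load
after_slowdown = lb.pick()   # traffic shifts to "c"
```

The shift in the last two lines is the oscillation risk in miniature: the favored server attracts load, slows down, and loses its place.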

6. Random with Two Choices

How it works: The load balancer randomly selects two servers and then chooses the one with fewer connections.

Best for: Large-scale deployments where checking all servers would be inefficient.

Limitations: Not as precise as checking all servers, but still effective and more scalable.
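
The two-choices idea fits in one small function. This sketch assumes connection counts are kept in a dictionary and takes a random generator as a parameter so that runs can be reproduced. All names are illustrative.

```python
import random
from typing import Dict


def pick_two_choices(active: Dict[str, int], rng: random.Random) -> str:
    """Sample two distinct servers and return the less loaded one."""
    first, second = rng.sample(list(active), 2)
    return first if active[first] <= active[second] else second


rng = random.Random(42)
connections = {f"server-{i}": 0 for i in range(1, 6)}
for _ in range(100):
    connections[pick_two_choices(connections, rng)] += 1
```

Only two counters are read per request, regardless of how many servers are in the pool.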

Types of Load Balancers

Load balancers come in different forms, each with its own strengths:

Hardware Load Balancers

Physical appliances specifically designed for load balancing.

Pros:

High performance

Purpose-built hardware

Often include specialized ASICs for SSL acceleration

Cons:

Expensive

Fixed capacity

Physical maintenance required

Popular products:

F5 BIG-IP

Citrix ADC (formerly NetScaler)

A10 Networks Thunder ADC

Software Load Balancers

Software applications that run on standard operating systems.

Pros:

Cost-effective

Flexible deployment options

Easy to scale

Cons:

Performance depends on underlying hardware

May require OS maintenance

Popular products:

NGINX Plus

HAProxy

Apache Traffic Server

Envoy

Cloud Load Balancers

Managed load balancing services provided by cloud platforms.

Pros:

No infrastructure to maintain

Pay-as-you-go pricing

Seamlessly scales with demand

Cons:

Less control over configuration

Potential vendor lock-in

Popular products:

AWS Elastic Load Balancing

Google Cloud Load Balancing

Azure Load Balancer

DigitalOcean Load Balancers

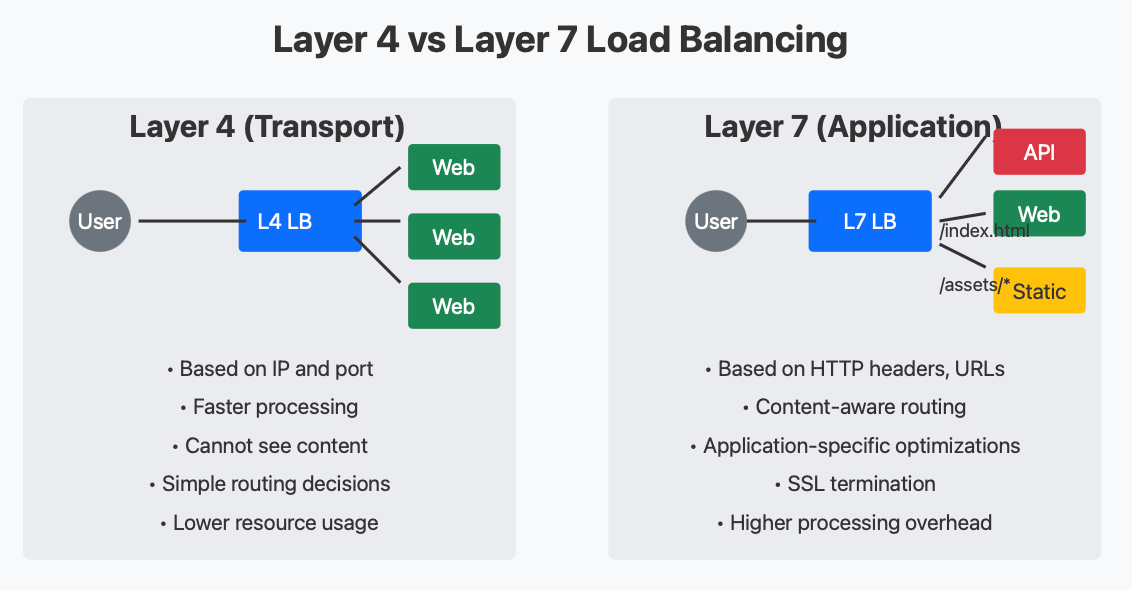

Layer 4 vs. Layer 7 Load Balancing

Load balancers operate at different layers of the OSI network model, with important distinctions:

Layer 4 Load Balancing

Operating at the transport layer (TCP/UDP), Layer 4 load balancers make routing decisions based on network information without inspecting the content of the packets.

Key characteristics:

Fast and efficient processing

Makes decisions based on IP addresses and ports

Cannot see the actual content of the traffic

Cannot make content-based routing decisions

Lower processing overhead

Real-world example: A financial trading platform that prioritizes minimal latency might use Layer 4 load balancing for its trading API endpoints. The load balancer makes quick routing decisions based solely on network information, minimizing processing time.

Layer 7 Load Balancing

Operating at the application layer, Layer 7 load balancers can examine the actual content of each request, such as HTTP headers, URLs, and cookies.

Key characteristics:

Content-aware routing (e.g., direct /api requests to API servers, /images to media servers)

Can perform SSL termination

Ability to implement application-specific optimizations

Can route based on user cookies or other application data

Higher processing overhead

Real-world example: A large e-commerce website might use Layer 7 load balancing to route API calls to backend servers, product images to a CDN edge, and checkout processes to specialized payment servers—all through the same domain name but determined by the URL path and request type.
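
Content-aware routing of this kind can be sketched as a prefix table that maps URL paths to server pools. The pool names and prefixes below are hypothetical. A Layer 4 balancer could not make this decision, because it never sees the path.

```python
from typing import List, Tuple

# (path prefix, pool name) pairs, checked in order.
ROUTES: List[Tuple[str, str]] = [
    ("/api/", "api-pool"),
    ("/images/", "media-pool"),
    ("/checkout/", "payment-pool"),
]
DEFAULT_POOL = "web-pool"


def choose_pool(path: str) -> str:
    """Return the pool for the first matching prefix, else the default."""
    for prefix, pool in ROUTES:
        if path.startswith(prefix):
            return pool
    return DEFAULT_POOL


api_target = choose_pool("/api/orders/42")
image_target = choose_pool("/images/logo.png")
page_target = choose_pool("/about")
```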

Real-World Use Cases

Load balancing powers many of the services we use daily. Let's explore how different industries leverage this technology:

E-commerce

Challenge: During sales events like Black Friday, traffic can increase by 1000% or more in a matter of minutes.

Solution: Multi-tier load balancing architecture with global and local load balancers that can scale instantly to meet demand spikes.

Example: Amazon's infrastructure has reportedly handled over 600 transactions per second during peak times, with load balancers distributing requests across thousands of servers.

Media Streaming

Challenge: Delivering high-quality video content to millions of simultaneous viewers while maintaining low buffering times.

Solution: Geographically distributed load balancers that direct users to the closest content delivery servers.

Example: Netflix uses sophisticated load balancing to serve over 200 million subscribers worldwide, automatically routing users to optimal servers based on content availability, server health, and network conditions.

Online Gaming

Challenge: Maintaining low-latency connections for real-time multiplayer gaming while handling unpredictable player counts.

Solution: Game-aware load balancers that maintain session persistence while distributing players across game servers.

Example: Fortnite, which can host millions of concurrent players, uses advanced load balancing to match players and maintain gameplay sessions across a global server infrastructure.

Financial Services

Challenge: Keeping critical financial operations available as close to 100% of the time as possible while protecting against security threats.

Solution: High-availability load balancer clusters with dedicated failover systems and advanced security features.

Example: Major stock exchanges use specialized load balancers that can process millions of transactions per second with sub-millisecond latency while ensuring no single point of failure exists.

Health Checks: The Guardian Function

A crucial but often overlooked aspect of load balancing is health monitoring. Load balancers continuously check the health and availability of backend servers through various methods:

Active Health Checks

The load balancer proactively sends test requests to each server at regular intervals:

TCP connection checks: Verifies that the server is accepting connections on the specified port

HTTP/HTTPS requests: Sends requests to specific endpoints and verifies correct responses

Application-specific checks: Tests particular application functionalities

Passive Health Checks

The load balancer monitors actual client traffic for signs of problems:

Connection failures: Tracks failed connection attempts

Timeout monitoring: Identifies servers responding too slowly

Error rates: Detects servers returning error codes

When a server fails health checks, the load balancer temporarily removes it from the server pool, rerouting traffic to healthy servers. Once the server passes health checks again, it's gradually reintroduced to the pool.
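
The removal and reintroduction logic can be sketched as a small state machine. To avoid flapping on a single bad probe, this example assumes a server is marked down only after `fall` consecutive failures and marked up again only after `rise` consecutive successes. The class name and threshold values are illustrative.

```python
class HealthTracker:
    def __init__(self, fall: int = 3, rise: int = 2) -> None:
        self.fall = fall          # consecutive failures before marking down
        self.rise = rise          # consecutive successes before marking up
        self.healthy = True
        self._streak = 0          # consecutive results contradicting the state

    def record(self, check_passed: bool) -> bool:
        """Record one health check result and return the current state."""
        if check_passed == self.healthy:
            self._streak = 0
        else:
            self._streak += 1
            threshold = self.rise if check_passed else self.fall
            if self._streak >= threshold:
                self.healthy = check_passed
                self._streak = 0
        return self.healthy


tracker = HealthTracker(fall=3, rise=2)
during_outage = [tracker.record(False) for _ in range(3)]
during_recovery = [tracker.record(True) for _ in range(2)]
```

The same tracker works for active checks (probe results) and passive checks (outcomes of real client requests).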

Implementation Considerations

When planning a load balancing strategy, consider these key factors:

1. Session Persistence

Some applications require users to maintain connections to the same server throughout their session (e.g., shopping carts, logged-in states). Options include:

Cookie-based persistence: The load balancer issues a cookie that identifies which server to use

IP-based persistence: The load balancer uses the client's IP address to determine server assignment

Application-controlled persistence: The application manages session data centrally
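
Cookie-based persistence can be sketched as follows. The cookie name `lb_server` and the class are hypothetical, and cookies are modeled as plain dictionaries rather than real HTTP headers.

```python
from itertools import cycle
from typing import Dict, List, Tuple

COOKIE_NAME = "lb_server"  # hypothetical cookie name


class StickyBalancer:
    def __init__(self, servers: List[str]) -> None:
        self.servers = set(servers)
        self._rotation = cycle(servers)

    def route(self, cookies: Dict[str, str]) -> Tuple[str, Dict[str, str]]:
        """Return (server, cookies to set on the response)."""
        pinned = cookies.get(COOKIE_NAME)
        if pinned in self.servers:
            return pinned, {}                      # honor the existing pin
        server = next(self._rotation)              # new or stale client
        return server, {COOKIE_NAME: server}


lb = StickyBalancer(["app-1", "app-2", "app-3"])
server, set_cookies = lb.route({})                 # first visit
repeat_server, _ = lb.route(set_cookies)           # browser sends cookie back
```

A cookie that names a server no longer in the pool is ignored, so the client is simply assigned again.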

2. SSL/TLS Termination

Encryption and decryption are computationally expensive. Load balancers can handle this process:

SSL Termination: The load balancer decrypts incoming traffic before passing it to backend servers

SSL Passthrough: Encrypted traffic passes through the load balancer untouched

SSL Bridging: The load balancer decrypts, inspects, and then re-encrypts traffic

3. Connection Draining

When taking servers out of rotation for maintenance, connection draining allows existing connections to complete naturally while preventing new connections:

Gradual decommissioning: Existing sessions continue, but no new sessions start

Timeout settings: Maximum time to wait for session completion

Force termination: Option to close connections after a certain period
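
The three draining behaviors above can be sketched with a per-server flag and a connection counter. The class, method names, and timeout values are assumptions for illustration.

```python
class DrainableServer:
    def __init__(self, name: str) -> None:
        self.name = name
        self.draining = False
        self.active = 0            # connections currently in flight

    def start_draining(self) -> None:
        self.draining = True

    def accepts_new(self) -> bool:
        """The balancer checks this before sending a new connection."""
        return not self.draining

    def open_connection(self) -> None:
        if self.draining:
            raise RuntimeError(f"{self.name} is draining")
        self.active += 1

    def close_connection(self) -> None:
        self.active -= 1

    def safe_to_remove(self, waited_s: float, timeout_s: float) -> bool:
        """True once connections finish or the drain timeout expires."""
        return self.draining and (self.active == 0 or waited_s >= timeout_s)


server = DrainableServer("app-1")
server.open_connection()
server.start_draining()
still_busy = server.safe_to_remove(waited_s=5, timeout_s=30)
server.close_connection()
drained = server.safe_to_remove(waited_s=6, timeout_s=30)
```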

4. Monitoring and Metrics

Effective load balancing requires visibility into system performance:

Traffic distribution: Ensuring balanced workload across servers

Response times: Identifying slow-responding servers

Connection counts: Tracking active and idle connections

Error rates: Monitoring application and server errors

Health check results: Observing server availability patterns

Popular Load Balancing Solutions

Hardware Solutions

F5 BIG-IP

Enterprise-grade hardware appliances

Comprehensive security features

Advanced traffic management capabilities

High throughput (up to millions of connections)

Premium pricing ($20,000+ per appliance)

Citrix ADC (formerly NetScaler)

Application delivery controllers with load balancing

Strong in virtualized environments

Integrated web application firewall

Flexible licensing models

Software Solutions

NGINX Plus

Based on the popular open-source web server

Excellent performance-to-cost ratio

Strong HTTP/HTTPS load balancing capabilities

Active health checks and session persistence

Commercial support and advanced features

HAProxy

Open-source TCP/HTTP load balancer

Extremely fast and lightweight

Reliable under high loads

Detailed logging and statistics

Community-driven with enterprise options

Envoy

Modern, high-performance service proxy

Designed for cloud-native applications

Advanced observability features

Dynamic configuration API

Used in Kubernetes and service mesh architectures

Cloud Provider Solutions

AWS Elastic Load Balancing (ELB)

Application Load Balancer (ALB) for HTTP/HTTPS traffic

Network Load Balancer (NLB) for TCP/UDP traffic

Gateway Load Balancer for third-party virtual appliances

Automatic scaling and high availability

Pay-as-you-go pricing

Google Cloud Load Balancing

Global and regional load balancing options

Integrated with Google's global network

Automatic scaling to millions of queries per second

Advanced traffic management features

Seamless integration with Google Cloud services

Azure Load Balancer

Native Microsoft Azure integration

Both public and internal load balancing

Port forwarding and health probing

Low latency and high throughput

Cost-effective for Microsoft-centric deployments

Getting Started: A Simple Implementation

To illustrate how accessible load balancing can be, let's look at a basic NGINX configuration that implements round-robin load balancing:

    events {}  # required when this is used as a standalone nginx.conf

    http {
        # Define the group of servers to balance between
        upstream web_backend {
            server 192.168.1.10:80;  # Server 1
            server 192.168.1.11:80;  # Server 2
            server 192.168.1.12:80;  # Server 3
        }

        server {
            listen 80;
            server_name example.com;

            location / {
                proxy_pass http://web_backend;
                proxy_set_header Host $host;
                proxy_set_header X-Real-IP $remote_addr;

                # Passive failover: retry on the next server after a
                # connection error, a timeout, or an HTTP 500 response
                proxy_next_upstream error timeout http_500;
            }
        }
    }

This simple configuration:

Creates a server group called web_backend with three servers

Listens for requests to example.com on port 80

Forwards requests to the backend servers in round-robin fashion

Sets headers so backend servers can see the original host name and the client's IP address

Implements basic error handling to skip failed servers

Conclusion: Beyond Traffic Distribution

Load balancing, at its core, transforms the way we architect digital services. What begins as a solution to handle increasing traffic becomes the foundation for resilient, scalable, and high-performance systems.

The true power of load balancing extends far beyond simply distributing requests. It enables:

Business continuity through elimination of single points of failure

Cost optimization by efficiently utilizing resources

Geographic reach through distributed service delivery

Security enhancement via specialized inspection and filtering

Performance gains through intelligent request routing

As you continue this journey into load balancing, you'll discover that mastering this technology isn't just about keeping services online—it's about creating systems that thrive under pressure, scale without limits, and deliver exceptional user experiences regardless of demand.

In the next article in this series, we'll dive deeper into load balancing algorithms, exploring how different distribution methods can be optimized for specific use cases and application requirements.